Digital video technology has changed how we capture, process, and share visual content. By converting moving images into digital data, it enables high-quality video streaming, editing, storage, and communication across devices, powering everything from social media to cinema and virtual meetings. Explore how digital video technology drives modern content, from capture and compression to streaming and tomorrow’s breakthroughs. This guide dives deep into formats, workflows, hurdles, and innovations without any fluff.

What Is Digital Video Technology?

Digital video technology converts real-world scenes into streams of data you can easily share and experience. It all starts with cameras and sensors capturing light, translating it into electrical signals, and digitizing that information into pixels and frames. Recording at 1080p and 30 frames per second is common, striking a balance between clarity and manageable file size. Jumping to 4K or 8K delivers stunning visuals but needs much more storage and processing power: 4K has four times the pixels of 1080p, and 8K has sixteen times.
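
To see why higher resolutions demand so much more storage, here is a rough back-of-the-envelope sketch in Python. It assumes 24 bits per pixel (8-bit color with no chroma subsampling); that figure and the function name `raw_bitrate_mbps` are illustrative assumptions, not values from any particular camera.

```python
# Rough estimate of uncompressed video data rates.
# Assumption: 24 bits per pixel (8-bit RGB, no chroma subsampling).

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Return the uncompressed data rate in megabits per second."""
    return width * height * bits_per_pixel * fps / 1_000_000


for name, (width, height) in RESOLUTIONS.items():
    rate = raw_bitrate_mbps(width, height, fps=30)
    print(f"{name}: {rate:,.0f} Mbit/s uncompressed at 30 fps")
```

Under these assumptions, 1080p at 30 fps works out to roughly 1.5 Gbit/s before compression, which is why codecs matter so much in practice.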

Compression plays a key role here. Encoding shrinks files by discarding data that’s less noticeable to the human eye, while decoding reconstructs a close approximation of the original frames for playback. This process is essential to manage file size and support real-time streaming.

The core tools include capture devices like DSLRs, smartphones, and drones; editing software such as Premiere or DaVinci Resolve; codecs that determine how tightly video is packed; and playback systems that deliver it via browsers or apps.

Digital Video Formats and Codecs Explained

Containers like MP4, MOV, MKV, and WebM act as “wrappers” for video data. MP4 is the universal choice, ensuring playback across nearly every device and browser. MOV works seamlessly in Apple-centric environments and is often paired with lightly compressed, high-quality codecs such as ProRes, which means larger files. MKV excels when you need multi-language subtitles and multiple audio tracks, while WebM fits perfectly into the open web ecosystem with its royalty-free nature.

Within those containers, codecs such as H.264, H.265 (HEVC), AV1, and VVC compress the video content. H.264 remains the workhorse, balancing quality and compatibility. H.265 offers better compression ratios but comes with licensing obligations. AV1 outshines H.265 in efficiency and is royalty free, though device support is still growing. VVC is even more efficient but hasn’t yet entered mainstream usage.

Understanding the difference between a container and a codec is crucial: containers hold the components, including video, audio, and subtitles, while codecs determine how those components are packed and unpacked.
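
One way to picture the relationship is as a small data model. The sketch below is purely illustrative: the `Stream` and `Container` classes are hypothetical names invented for this example, not part of any real media library.

```python
from dataclasses import dataclass, field


@dataclass
class Stream:
    kind: str               # "video", "audio", or "subtitle"
    codec: str              # how this stream is compressed, e.g. "hevc" or "aac"
    language: str = "und"   # "und" = undetermined


@dataclass
class Container:
    format: str                                   # the wrapper, e.g. "mp4" or "mkv"
    streams: list[Stream] = field(default_factory=list)

    def codecs(self, kind: str) -> list[str]:
        """List the codecs used by all streams of the given kind."""
        return [s.codec for s in self.streams if s.kind == kind]


# One MKV file holding one video track, two audio tracks, and a subtitle track.
movie = Container(
    format="mkv",
    streams=[
        Stream("video", "hevc"),
        Stream("audio", "aac", "eng"),
        Stream("audio", "aac", "spa"),
        Stream("subtitle", "subrip", "eng"),
    ],
)

print(movie.format, movie.codecs("video"), movie.codecs("audio"))
```

The container is the outer object, and each stream inside it carries its own codec. Swapping the container format would not change how any individual stream is compressed.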

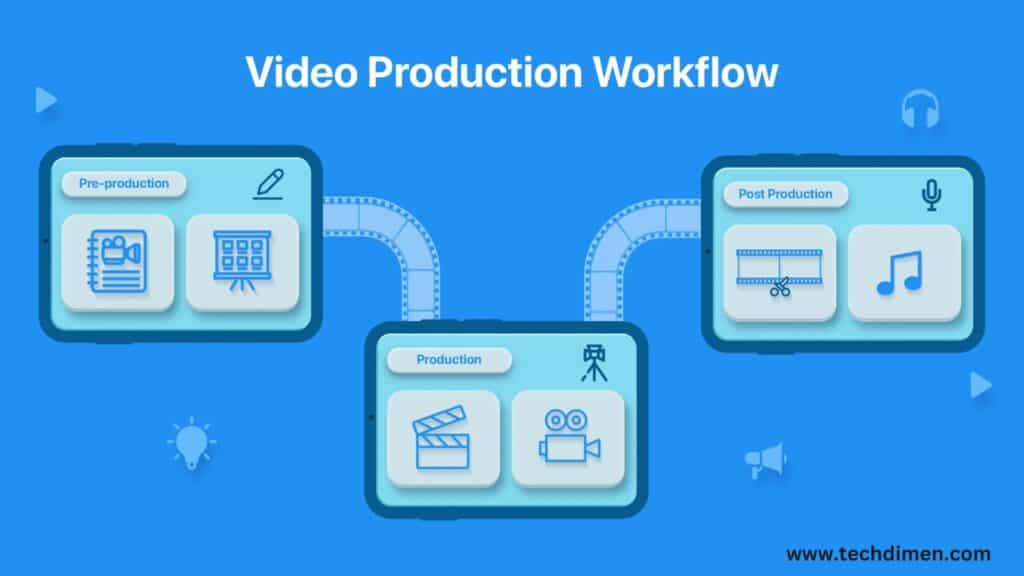

The Video Production Workflow

Creating video content involves several intertwined steps. It starts with acquisition, an area that’s become democratized thanks to high-quality smartphone and drone cameras capable of capturing 4K HDR footage. For professionals, cinema-grade cameras from RED or Sony deliver even higher performance. Simplicity meets functionality with webcams and capture devices like the Logitech Brio or Elgato Cam Link, staples among live streamers and remote presenters.

Next comes post-production. Editors use non-linear platforms such as Premiere Pro, DaVinci Resolve, or Final Cut Pro to cut footage, arrange sequences, and apply effects. Colorists balance and enhance visuals using powerful grading tools, while motion graphics artists animate scenes with software like After Effects or Apple Motion. Audio professionals then fine-tune soundtracks using techniques like equalization and ducking to ensure clarity and richness.

When it’s time to share, exporting typically involves selecting presets tailored for specific platforms such as YouTube, Vimeo, or broadcast networks. Two-pass encoding, in which a first pass analyzes the footage and a second pass allocates bits accordingly, improves the quality-to-size ratio at a given target bitrate. To accommodate users across a range of devices and connections, creators generate multiple renditions, from low-res mobile versions to full-quality 4K, enabling adaptive bitrate streaming.
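
As a rough illustration of what generating renditions with two-pass encoding can look like, the Python sketch below builds FFmpeg command lines for a small bitrate ladder. The ladder values, file names, and the helper `two_pass_commands` are assumptions made up for this example; it only prints the commands, and the `/dev/null` target assumes a Unix-like system.

```python
import shlex

# Hypothetical ladder: (frame height in pixels, video bitrate in kbit/s).
LADDER = [(360, 800), (720, 2800), (1080, 5000), (2160, 16000)]


def two_pass_commands(src: str, height: int, kbps: int) -> tuple[list[str], list[str]]:
    """Build the first-pass and second-pass FFmpeg commands for one rendition."""
    common = [
        "ffmpeg", "-y", "-i", src,
        "-vf", f"scale=-2:{height}",          # keep aspect ratio, force an even width
        "-c:v", "libx264", "-b:v", f"{kbps}k",
        "-passlogfile", f"pass_{height}p",    # separate stats file per rendition
    ]
    # Pass 1 only analyzes the video: no audio, output discarded.
    first = common + ["-pass", "1", "-an", "-f", "null", "/dev/null"]
    # Pass 2 uses the stats from pass 1 and writes the real file.
    second = common + ["-pass", "2", "-c:a", "aac", "-b:a", "128k", f"out_{height}p.mp4"]
    return first, second


for height, kbps in LADDER:
    for cmd in two_pass_commands("input.mp4", height, kbps):
        print(shlex.join(cmd))
```

Printing the commands rather than running them keeps the sketch safe to try anywhere. The right bitrates depend heavily on the content, so treat the ladder as a starting point rather than a recommendation.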

Delivery happens through HTML5 players embedded in websites or via native apps. Streaming protocols such as HLS and MPEG-DASH serve the content: HLS was developed by Apple and is the native choice on Apple devices, while DASH is an open standard widely used on Android and in browser-based players. Content Delivery Networks (CDNs) like Cloudflare or Amazon CloudFront handle distribution, reducing buffering and ensuring a smooth viewing experience.
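
To make the HLS side more concrete, here is a minimal sketch of how a master playlist ties the renditions together. The rendition list, file paths, and the helper name `master_playlist` are assumptions for illustration; a real packager would add more attributes, such as codec strings.

```python
# Hypothetical renditions; BANDWIDTH is expressed in bits per second.
RENDITIONS = [
    {"bandwidth": 800_000, "width": 640, "height": 360, "uri": "360p/index.m3u8"},
    {"bandwidth": 2_800_000, "width": 1280, "height": 720, "uri": "720p/index.m3u8"},
    {"bandwidth": 5_000_000, "width": 1920, "height": 1080, "uri": "1080p/index.m3u8"},
]


def master_playlist(renditions: list[dict]) -> str:
    """Return the text of a basic HLS master playlist."""
    lines = ["#EXTM3U"]
    for r in renditions:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r['bandwidth']},"
            f"RESOLUTION={r['width']}x{r['height']}"
        )
        lines.append(r["uri"])  # each variant points at its own media playlist
    return "\n".join(lines) + "\n"


print(master_playlist(RENDITIONS))
```

The player downloads this small text file first, then picks one of the listed variants based on the bandwidth it measures.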

Real-World Applications of Digital Video Technology

Digital video powers everything from entertainment to enterprise functions. Major streaming platforms like Netflix and YouTube convert content into multiple bitrates, ensuring seamless playback. Social apps such as TikTok rely on instant playback and optimized encoding to keep users engaged.

In education, Massive Open Online Courses (MOOCs) host pre-recorded videos enriched with transcripts, subtitles, and interactive tools to boost learning. Corporations use similar tactics for training and internal communications, often overlaying branding or interactive elements.

Marketing strategies also lean heavily on video. Brands harness livestream shopping to let viewers make purchases in real time, while product demo videos increase conversion by offering clarity and context. The surveillance and health sectors leverage streaming to power real-time alerts and analytics. Smart city cameras, surgical video feeds, and AI-driven object detection all rely on digital video tech.

What’s Trending in Digital Video Technology for 2025

Artificial Intelligence is transforming video creation. Tools like Runway ML and Descript let users automatically edit scenes and generate captions. Deepfake detection technologies work behind the scenes to protect content integrity.

Cloud-based production is surging ahead. Platforms like Frame.io and Blackmagic Cloud support remote teamwork with real-time proxies and collaborative workflows. Virtual production, using LED backdrops as seen on The Mandalorian, allows filmmakers to shoot immersive scenes without needing location setups.

At the same time, high-efficiency formats like AV1 and VVC enable delivery of ultra-high-resolution 4K and 8K content with leaner file sizes. HDR formats like HDR10+ and Dolby Vision deliver richer visuals with stunning color and contrast.

Immersive media is also growing fast. AR and VR blur the line between real and digital worlds, while volumetric video captures physical environments, opening up new ways to tell stories in six degrees of freedom.

Challenges in Digital Video Technology

Bandwidth constraints continue to challenge live streaming. Delivering real-time video such as sports or esports while keeping latency under 300 milliseconds still demands robust infrastructure. Businesses rely on edge servers and optimized codecs, although the expense can be steep.

Format fragmentation complicates matters even more. Not every browser or device supports every codec, so creators must transcode videos into multiple versions to ensure every viewer gets a seamless experience.

DRM and licensing requirements add overhead. Streaming services like Hulu or Apple TV+ use systems like FairPlay and Widevine to protect content, while blockchain-based rights solutions promise more transparency and fair revenue distribution.

Finally, the environmental impact of video can’t be ignored. By some estimates, streaming an hour of video emits on the order of 100 grams of CO₂, though published figures vary widely with the device, network, and energy mix. To reduce this, platforms are introducing low-carbon playback modes and optimizing data centers to minimize energy use.

The Future of Digital Video Technology

Looking forward, AI will play a bigger role in editing, personalization, and predictive storytelling. Imagine an editor that suggests highlights, builds trailers, or personalizes videos based on what you click or watch longer.

Blockchain is poised to revolutionize rights management. Smart contracts could automatically track ownership and distribute royalties. Every time a clip is used, blockchain logs could ensure creators get paid transparently.

On the experimental side, researchers are exploring quantum and neural compression techniques. These future codecs might surpass today’s best and shrink video data even more without losing quality.

Optimizing Digital Video on the Web

To keep your videos smooth and fast, combine two-pass encoding with adaptive bitrate streaming so users always get the best experience their connection allows. Implement lazy loading so videos only load when users scroll to them. Distribute content via a CDN to minimize delays and buffering across regions. Think mobile-first: serve lower-res versions to phones to save data and battery on the go. Always add captions and transcripts; they improve accessibility, SEO, and viewer satisfaction.

Cloud Video Editing Tools

| Platform | Key Strength | Best For |
|---|---|---|
| Frame.io | Team Collaboration | Post Teams |
| WeVideo | Simple Editing | Teachers, SMBs |
| Blackmagic Cloud | High-End Grading | Film, Studios |
| Canva Video | Drag & Drop Video | Ads, Social |

FAQs

What is digital video technology and how does it work?

Digital video technology refers to the use of digital signals to record, process, store, and distribute video content. Unlike analog systems, digital video breaks footage into binary data, making it easier to edit, compress, stream, and share across platforms. It starts with capturing footage using digital cameras or smartphones, then encoding it using video compression codecs, and finally distributing it online or offline using various digital formats. This entire process, from capture to playback, relies on advanced digital media workflows, efficient codecs, and hardware compatibility.

Which digital video formats are commonly used today?

Among the most popular digital video formats are MP4, MOV, AVI, and MKV. MP4 remains the most widely used format due to its small file size, broad compatibility, and efficiency for streaming and web publishing. MOV, often preferred for high-quality video editing in Apple environments, is typically paired with lightly compressed codecs, giving larger files but better quality retention. AVI is an older format that persists mainly in legacy workflows. MKV supports more advanced features like subtitles and multiple audio tracks, which makes it ideal for archiving and storing digital video content.

What is the difference between a codec and a container in video production?

A common point of confusion in digital video technology is the distinction between a codec and a container. A codec is the algorithm used to compress and decompress video data; examples include H.264, H.265, and AV1. A container, on the other hand, is the wrapper that holds video, audio, subtitles, and metadata. Popular containers include MP4, MOV, and MKV. In essence, the codec is responsible for encoding the data, while the container organizes it.

Is AV1 better than H.265 for video streaming?

AV1 is a newer, royalty-free codec developed by the Alliance for Open Media. It generally offers better compression than H.265 (also known as HEVC), meaning you can stream higher-quality video at lower bitrates. This is particularly useful for mobile devices and slow internet connections. However, AV1 adoption is still growing, while H.265 remains more widely supported on current hardware and software platforms.

What is the best format for uploading videos to YouTube?

YouTube recommends using the MP4 container format with H.264 video and AAC audio for uploads. This combination offers the best balance between quality and file size. The platform also supports resolutions up to 8K, but most users stick with 1080p or 4K for faster processing and playback.

How can I reduce video file size without losing quality?

Reducing video file size without significant quality loss involves using efficient codecs like H.265 or AV1, lowering the bitrate, and leveraging multi-pass encoding during export. Tools such as HandBrake, Adobe Media Encoder, and DaVinci Resolve allow users to tweak these settings for optimal results. Always aim for a bitrate that suits your delivery platform, whether that is streaming, social media, or storage.
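
Because file size is essentially bitrate multiplied by duration, you can estimate the result before you export. The sketch below shows the arithmetic in Python; the function names are hypothetical, 1 MB is taken as 1,000,000 bytes, and container overhead is ignored.

```python
def estimated_size_mb(video_kbps: float, audio_kbps: float, duration_s: float) -> float:
    """Estimate file size in megabytes from bitrates (kbit/s) and duration (seconds)."""
    total_kbits = (video_kbps + audio_kbps) * duration_s
    return total_kbits / 8 / 1000  # kbit -> kB -> MB


def video_kbps_for_size(target_mb: float, audio_kbps: float, duration_s: float) -> float:
    """Work backwards: the video bitrate that fits a target file size."""
    total_kbps = target_mb * 8 * 1000 / duration_s
    return total_kbps - audio_kbps


# A 10-minute clip at 5000 kbit/s video plus 128 kbit/s audio.
size = estimated_size_mb(5000, 128, duration_s=600)
print(f"Estimated size: {size:.1f} MB")

# The video bitrate needed to fit the same clip into 200 MB.
print(f"Video bitrate for 200 MB: {video_kbps_for_size(200, 128, 600):.0f} kbit/s")
```

Working backwards from a size limit like this is a quick way to choose a target bitrate for multi-pass encoding.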

Why is adaptive bitrate streaming important for video playback?

Adaptive bitrate streaming improves the user experience by automatically adjusting video quality in real time based on the viewer’s internet connection. If bandwidth drops, the system switches to a lower-resolution stream to avoid buffering. This method, used in streaming protocols like HLS and MPEG-DASH, ensures smooth, uninterrupted playback regardless of network conditions.
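
The core idea can be sketched in a few lines of Python. This is a deliberately simplified, throughput-only rule with a hypothetical safety margin; real players typically also weigh buffer level and other signals, so treat it as an illustration rather than how any specific player works.

```python
def pick_rendition(ladder_kbps: list[int], measured_kbps: float, safety: float = 0.8) -> int:
    """Pick the highest bitrate that fits within a safety margin of measured bandwidth.

    Falls back to the lowest rendition when nothing fits.
    """
    budget = measured_kbps * safety
    candidates = [b for b in ladder_kbps if b <= budget]
    return max(candidates) if candidates else min(ladder_kbps)


ladder = [800, 2800, 5000, 16000]  # hypothetical rendition bitrates in kbit/s

for bandwidth in (25000, 4000, 500):
    choice = pick_rendition(ladder, bandwidth)
    print(f"{bandwidth} kbit/s measured -> {choice} kbit/s rendition")
```

The safety margin leaves headroom so that a small dip in bandwidth does not immediately cause a stall.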

What is non-linear editing (NLE) and how is it used in video post-production?

Non-linear editing (NLE) refers to the method of editing video digitally, allowing editors to access and rearrange any part of the footage without following a linear sequence. This approach contrasts with the traditional tape-based linear editing systems of the past. NLE software like Adobe Premiere Pro, Final Cut Pro, and DaVinci Resolve enables modern editors to work flexibly, insert effects, color-correct, and refine audio in a seamless timeline-based interface.

What are the standard video resolution formats in digital media today?

Video resolution standards vary by platform and content type. Full HD, also known as 1080p (1920×1080 pixels), remains a go-to standard for online content. For higher-end production, 4K (3840×2160 pixels) has become increasingly common. Ultra-high-end broadcasts and cinematic productions are beginning to adopt 8K (7680×4320 pixels), although storage and processing demands remain a barrier for many users.

How is AI being used in digital video workflows?

Artificial intelligence is rapidly transforming digital video production. In editing, AI tools can auto-generate highlight reels, apply intelligent color grading, transcribe speech to text, and even suggest creative cuts based on pacing and visual interest. Platforms like Adobe Sensei and Runway ML are integrating these features to reduce editing time while enhancing creativity. In streaming, AI also helps personalize content recommendations and optimize delivery based on user behavior.

Which streaming protocols are used in digital video technology?

Digital video streaming relies on several key protocols. HLS (HTTP Live Streaming), developed by Apple, is one of the most widely used and supports adaptive streaming. MPEG-DASH is another adaptive streaming protocol supported by most modern browsers. RTMP (Real-Time Messaging Protocol) is still used for pushing live streams to platforms like Facebook Live and YouTube Live, though it’s slowly being replaced by newer technologies like WebRTC for real-time interactions.

What does the future of digital video technology look like?

The future of digital video technology is dynamic and fast-evolving. In 2025 and beyond, we can expect increased adoption of AI-driven editing platforms, widespread use of 8K and virtual production techniques, and a stronger shift toward cloud-based video workflows. Another emerging trend is blockchain-based digital rights management, which could change how creators license and monetize content. Green streaming technologies will also rise to address the environmental impact of massive video data transmission.

Do I need to include captions in my videos for accessibility?

Yes, adding captions is essential not just for accessibility compliance under laws like the ADA and guidelines like WCAG, but also for improving video SEO. Captions allow viewers to watch videos without sound and help search engines understand the video’s content. Most platforms, including YouTube and Vimeo, support auto-captioning, but manual editing ensures accuracy.

What’s the best video format for running Facebook or Instagram ads?

For social media ads, MP4 with the H.264 codec is ideal. Keep the aspect ratio optimized for mobile users: 1:1 (square) or 9:16 (vertical). Ensure the resolution is high (at least 1080×1080 for square) and that the total file size does not exceed 4GB. A video length of 15 to 60 seconds tends to perform best across platforms.
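
If you export ads regularly, a small pre-upload check can catch mistakes early. The Python sketch below simply encodes the figures quoted above; it is not an official platform specification, the function name `check_ad_video` is hypothetical, and treating 4GB as 4 × 1024³ bytes is an assumption.

```python
from fractions import Fraction

ALLOWED_RATIOS = {Fraction(1, 1), Fraction(9, 16)}  # square or vertical
MAX_BYTES = 4 * 1024**3                             # assumed meaning of "4GB"


def check_ad_video(width: int, height: int, size_bytes: int, duration_s: float) -> list[str]:
    """Return a list of problems; an empty list means the video passes every check."""
    problems = []
    if Fraction(width, height) not in ALLOWED_RATIOS:
        problems.append(f"aspect ratio {width}:{height} is not 1:1 or 9:16")
    if min(width, height) < 1080:
        problems.append("shorter side is below 1080 pixels")
    if size_bytes > MAX_BYTES:
        problems.append("file is larger than 4GB")
    if not 15 <= duration_s <= 60:
        problems.append("length is outside the suggested 15 to 60 seconds")
    return problems


print(check_ad_video(1080, 1920, 50_000_000, 30))   # vertical video, passes
print(check_ad_video(1920, 1080, 50_000_000, 90))   # landscape and too long
```

Platform requirements change over time, so check the current ad specs before relying on these thresholds.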

Can I edit 4K video on a standard laptop?

Editing 4K video on a laptop is possible, but performance depends on the machine’s specs. You’ll need a system with at least 16GB of RAM, a dedicated graphics card, SSD storage, and a capable CPU. Using proxy editing (working with lower-resolution copies during editing) can help ensure smoother performance in software like DaVinci Resolve or Adobe Premiere Pro.

Final Thoughts

Digital video technology is at the heart of how we share, learn, and entertain in 2025. Whether you’re creating content or managing infrastructure, mastering formats, workflows, tools, and trends gives you a major edge.

Jhon AJS is a tech enthusiast and author at Tech Dimen, where he explores the latest trends in technology and TV dimensions. With a passion for simplifying complex topics, Jhon aims to make tech accessible and engaging for readers of all levels.